Week 04 Lecture: Generating things with ML

Generative Models and Molecular Conformational Ensembles¶

Overview for today¶

Today we will cover the following topics:

What are Generative Models?

How do people (currently) apply Generative Models in Molecular Sciences?

Particular attention on: generation of molecular conformations.

What kind of Generative Models are there?

Normalizing Flows

Variational Autoencoders (VAEs)

Generative Adversarial Networks (GANs)

What are the limits of Generative Models when generating molecular conformations?

1 - What are Generative Models?¶

What are Generative Models used for?¶

We have a series of data samples x in a training set.

There exists a probability distribution pdata for them.

We want a model that learns a distribution pmodel to approximate pdata.

Once our model is trained, we want to:

Sample from pmodel.

Always possible.

Compute pmodel(x) for arbitrary samples.

Not always possible.
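
As a toy illustration of these two operations, the sketch below uses a one-dimensional Gaussian as pmodel, a model simple enough that both sampling and density evaluation are available. The data and the helper names (`fit_gaussian`, `sample`, `log_density`) are assumptions made for this example only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy training set: samples x from an (in practice unknown) p_data.
x_train = rng.normal(loc=2.0, scale=0.5, size=5000)

def fit_gaussian(x):
    """Fit p_model = N(mu, sigma^2) by maximum likelihood."""
    return x.mean(), x.std()

def sample(mu, sigma, n, rng):
    """Draw n samples from p_model."""
    return rng.normal(mu, sigma, size=n)

def log_density(x, mu, sigma):
    """Evaluate log p_model(x) for arbitrary x."""
    return -0.5 * np.log(2 * np.pi * sigma**2) - 0.5 * ((x - mu) / sigma) ** 2

mu, sigma = fit_gaussian(x_train)
new_samples = sample(mu, sigma, 10, rng)
print(log_density(np.array([2.0, 5.0]), mu, sigma))
```

For most of the models in this lecture the first operation stays this easy, while the second one may be unavailable or only approximate.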

Currently, most Generative Models are based on neural networks (NNs). Why?

Why NNs? Example of a Generative Model using a NN¶

Random 1024x1024 pixel samples from StyleGAN2 [Karras et al., 2019].

Number of dimensions: 1024x1024x3=3145728.

GAN based on a CNN architecture (with a LOT of engineering).

Training set: 70k images from Flickr.

Training time: 9 days with 8 Tesla V100 GPUs.

Try it at: https://thispersondoesnotexist.com.

Why do we need Machine Learning for Generative Modeling?¶

The machine learning toolkit allows us to build Generative Models with:

Statistically-efficient learning:

Neural networks “understand” data better than histograms.

But exactly how? Local interpolation and extrapolation abilities (even experts do not know exactly).

Computationally-efficient (or at least reasonable) training:

Time (processing) and space (memory).

Sampling quality and sampling speed.

Biophysical Sciences and complex systems¶

In Biophysical Sciences, we are interested in complex, high-dimensional systems.

Example: bacterial ribosome.

2 - How do people (currently) apply Generative Models in Molecular Sciences?¶

Molecular Sciences Applications¶

Numerous applications.

Cutting-edge field. So MUCH remains to be done!

Some examples:

Generate new molecular formulas (e.g.: drugs).

We will only discuss this briefly and give some references, in case you are interested.

Model the distributions of molecular conformations.

We will talk about this a lot in the rest of the lecture!

The field is still in its infancy.

Lots of room for improvement and for exploring new methods.

Generate new molecular formulas¶

Basic principle.

Find some way to encode molecular formulas in x.

Collect a dataset of molecules of interest.

Train a Generative Model (may also use Reinforcement Learning in the process).

Sample from it (generate new molecular formulas).

Generate new molecular formulas: examples¶

One of the pioneering articles in the field: MolGAN: An implicit generative model for small molecular graphs (link).

One success story of deep learning and drug development: Deep learning enables rapid identification of potent DDR1 kinase inhibitors (link)

Commentary on the state of the art in this field: Can Generative-Model-Based Drug Design Become a New Normal in Drug Discovery? (link)

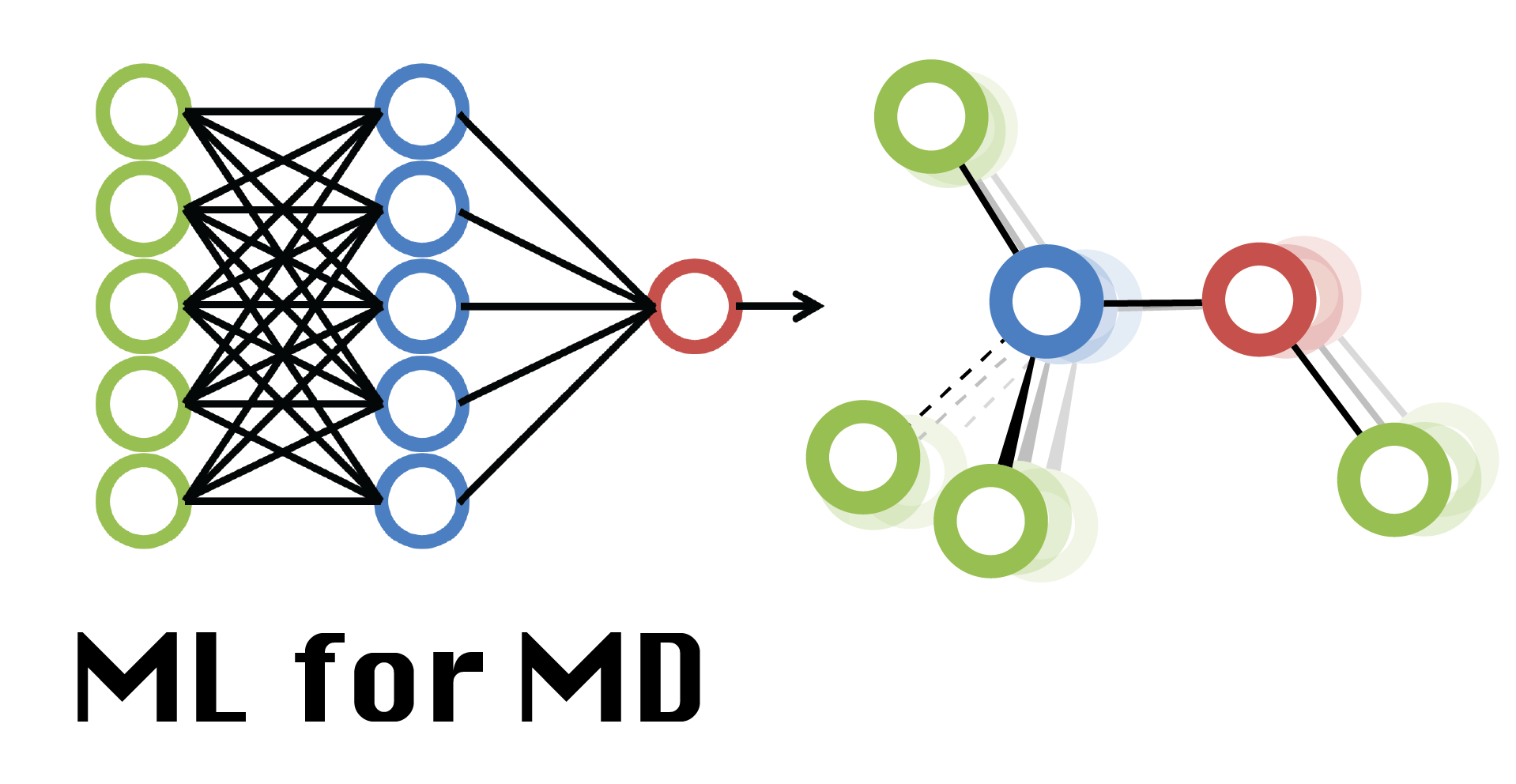

Model the distributions of molecular conformations¶

Sampling the conformations of a molecular system using “classical” approaches:

Using molecular dynamics (MD) or Monte Carlo-based methods we can sample the distribution of its conformations.

MD is a very powerful method, but many iterations are needed to sample the whole distribution. We need a lot of computing power.

Machine learning “promise”:

Can we train a generative model that learns the distribution of conformations of molecular systems?

Can we sample from these distributions faster than with “classical” methods?

What do we need to train a generative model for doing that?

Training data: conformations from “classical” methods (MD). How much? Open question.

How to best describe x, our conformations? It’s an open question:

xyz coordinates.

Internal coordinates.

Train a generative model. Which one? Another open question.

Molecular representation: xyz coordinates¶

Pros: all the geometric information we need.

Cons: most NNs are not SE(3) invariant.

Whatever translation or rotation we apply to our training data, the network should always learn the same thing!

We must rely on clever tricks to remove translational or rotational degrees of freedom.

NNs with SE(3) invariance exist, but there is not yet a consensus on which one works best in practice. Active area of research.

Optional: works with some E(3) and SE(3) invariant NNs.

Molecular representation: internal coordinates¶

E.g.: distance matrices.

Pros: their computation is SE(3) invariant.

Cons: not straightforward to go back to xyz coordinates.
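
To make the invariance argument concrete, the following sketch computes the distance matrix of a toy conformation before and after a random rotation and translation. The five-atom "molecule" and the helper names are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

def distance_matrix(xyz):
    """Pairwise Euclidean distances for an (n_atoms, 3) array."""
    diff = xyz[:, None, :] - xyz[None, :, :]
    return np.linalg.norm(diff, axis=-1)

def random_rotation(rng):
    """Random proper rotation (det = +1) from a QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))   # fix the sign ambiguity of QR
    if np.linalg.det(q) < 0:      # flip one axis to avoid a reflection
        q[:, 0] = -q[:, 0]
    return q

xyz = rng.normal(size=(5, 3))     # toy conformation with 5 atoms
rot = random_rotation(rng)
shift = rng.normal(size=3)
xyz_moved = xyz @ rot.T + shift   # rotate, then translate

d_before = distance_matrix(xyz)
d_after = distance_matrix(xyz_moved)
print(np.allclose(d_before, d_after))   # compares the internal coordinates
print(np.allclose(xyz, xyz_moved))      # compares the raw xyz coordinates
```

The xyz arrays differ while the distance matrices agree, which is why a network fed with distances does not need to learn the invariance by itself.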

3 - What kind of Generative Models are there?¶

Common characteristics of Generative Models in today’s lecture¶

All these models:

Learn from data.

Use neural networks (NNs) as function approximators.

Common NN architectures are used. You saw many of them before.

These NNs are trained on mini-batches using gradient descent.

The differences from supervised learning are:

Input and output of the NNs.

Loss function that we use.

Generate samples by randomly drawing from a simple prior (e.g.: normal distribution).

The networks will map noise z to some object x.

In other words: it’s extremely easy to sample from pmodel!

Can be used as unconditional or conditional models.

Unconditional Generative Models¶

They learn to approximate pdata(x).

In the figure: random samples from Image GPT.

Conditional Generative Models (very useful in Molecular Sciences)¶

They learn to approximate pdata(x | y).

What is y? Typical example: a class.

In the image: class-conditioned samples from [Miyato et al., 2018].

Actually, y in pdata(x | y) can be any variable:

Parameters of the system (e.g: temperature, pH, etc…).

Topology (e.g: molecular formula, amino acid sequence, etc…).

Entire data sample (e.g: conformation at previous time step).

Anything you can think of…

Conditional models allow us to control what to generate.

Example from [Simm and Hernández-Lobato, 2019]:

They use a Variational Autoencoder to model a distribution pdata(x | y).

y: graph representing a molecule.

x: molecular conformation.

If we train with many different molecules (we cover some significant part of the chemical space): allows us to generalize and generate conformations for molecules unseen in training data!

Normalizing Flows¶

How to learn distributions: maximum likelihood principle¶

Maximum likelihood estimation: important method in statistics.

Normalizing flows (and other generative models) use it.

Basic principle: our model (with parameters \(\theta\)) should assign high probability to the examples in the training set (image from the NIPS 2016 Tutorial on GANs).

A maximum likelihood-based loss:

\(L = -\frac{1}{n} \sum_{i=1}^{n} \log p_{\theta}(\boldsymbol{x}_i)\)

Here \(p_{model} = p_{\theta}\); we just want to highlight that the likelihood depends on \(\theta\).

Train the model: differentiate the loss with respect to \(\theta\) and optimize.
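
The recipe above can be sketched with the simplest possible model: a one-dimensional Gaussian whose parameters \(\theta\) = (mu, log sigma) are fitted by plain gradient descent on the loss L. The toy data, the learning rate and the hand-written gradient are assumptions for this example; in practice an automatic differentiation library would supply the gradient.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(loc=1.5, scale=0.7, size=2000)   # toy training set

def nll(theta, x):
    """L = -(1/n) sum_i log p_theta(x_i) for a Gaussian p_theta."""
    mu, log_sigma = theta
    sigma = np.exp(log_sigma)
    log_p = -0.5 * np.log(2 * np.pi) - log_sigma - 0.5 * ((x - mu) / sigma) ** 2
    return -log_p.mean()

def nll_grad(theta, x):
    """Analytic gradient of the loss with respect to (mu, log_sigma)."""
    mu, log_sigma = theta
    sigma = np.exp(log_sigma)
    d_mu = -((x - mu) / sigma**2).mean()
    d_log_sigma = 1.0 - (((x - mu) / sigma) ** 2).mean()
    return np.array([d_mu, d_log_sigma])

theta = np.array([0.0, 0.0])       # initial guess: mu = 0, sigma = 1
loss_start = nll(theta, x)
for step in range(2000):
    theta = theta - 0.05 * nll_grad(theta, x)   # plain gradient descent
loss_end = nll(theta, x)

mu_hat, sigma_hat = theta[0], np.exp(theta[1])
print(loss_start, loss_end, mu_hat, sigma_hat)
```

For this model the optimum is known in closed form (the sample mean and standard deviation), which makes it a convenient check that the optimization behaves as expected.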

Optional slide: maximum likelihood and KL divergence¶

Maximizing the likelihood asymptotically corresponds to minimizing the Kullback-Leibler divergence between pdata and pmodel (proof here).
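
A short sketch of why this holds, using the loss L and the model \(p_{\theta}\) defined above (the expectation notation \(\mathbb{E}\) is introduced here for the example):

```latex
\begin{aligned}
D_{KL}(p_{data} \,\|\, p_{\theta})
  &= \mathbb{E}_{\boldsymbol{x} \sim p_{data}}\left[\log p_{data}(\boldsymbol{x})\right]
   - \mathbb{E}_{\boldsymbol{x} \sim p_{data}}\left[\log p_{\theta}(\boldsymbol{x})\right] \\
  &\approx \text{const} - \frac{1}{n} \sum_{i=1}^{n} \log p_{\theta}(\boldsymbol{x}_i)
   = \text{const} + L
\end{aligned}
```

The first expectation does not depend on \(\theta\), and the second one is replaced by an average over the training set, which becomes exact as n grows. Minimizing L therefore minimizes the divergence up to a constant.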

Optional slide: a neural network to model pdata directly?¶

Suppose our data x is continuous (e.g.: xyz coordinates or distances).

Any NN is a function approximator \(f(\boldsymbol x; \theta)\).

So why not use NNs to model pdata by training with maximum likelihood? The idea is:

\(p_{model}(\boldsymbol x) = f(\boldsymbol x; \theta)\)

Will not work.

Probability density functions (pdf) are normalized.

\(\int_{-\infty}^\infty p_{model}(x)dx = 1\)

\(p_{model}(x) \geq 0 \quad \forall x\)

NNs are not normalized… will not learn a true pdf.

Training outcome: a powerful NN will put extremely high density (tending to infinity) on training datapoints, and extremely low density on the rest of the data space.

We need a partition function… but how to evaluate?

We can still try to model energies with our NN: Energy Based models.

Normalizing flows principles¶

Start from a simple prior. E.g. multivariate normal distribution: pz = N(0, I).

We can easily sample from it a z vector.

We can also easily evaluate its pz(z).

Our model: a NN function g that maps z to x (e.g.: a conformation):

The samples x = g(z; \(\theta\)) will be distributed according to some (arbitrarily complex) distribution px.

We will use px as our pmodel. How can we compute px(x)?

We can compute px(x) exactly if g:

Is an invertible function.

Has a Jacobian with respect to z whose determinant we can compute.

Just use the change of variable formula:

\(p_x(\boldsymbol{x}) = p_z(f(\boldsymbol{x})) \, |\det(Df(\boldsymbol{x}))|\)

Note, we define:

\(f = g^{-1}\).

Df(x) is the Jacobian of f(x) with respect to x.

Now we can train using maximum likelihood estimation.

\(\log p_x(\boldsymbol{x}) = \log p_z(f(\boldsymbol{x})) + \log |\det(Df(\boldsymbol{x}))|\)
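
A one-dimensional worked example may help here. Assume the simplest invertible map, x = g(z) = a z + b, with hypothetical parameters a and b. Then f(x) = (x - b) / a, Df(x) = 1 / a, and the formula should reproduce the density of a normal distribution with mean b and standard deviation |a|. The sketch below checks this numerically.

```python
import numpy as np

a, b = 2.0, 1.0                      # hypothetical flow parameters theta

def g(z):
    """Generator direction: maps noise z to a sample x."""
    return a * z + b

def f(x):
    """Inverse direction f = g^{-1}: maps x back to z."""
    return (x - b) / a

def log_pz(z):
    """Log-density of the standard normal prior."""
    return -0.5 * np.log(2 * np.pi) - 0.5 * z**2

def log_px(x):
    """log p_x(x) = log p_z(f(x)) + log |det Df(x)|, with Df(x) = 1/a."""
    return log_pz(f(x)) + np.log(abs(1.0 / a))

def log_normal(x, mean, std):
    """Reference: analytic log-density of N(mean, std^2)."""
    return -0.5 * np.log(2 * np.pi * std**2) - 0.5 * ((x - mean) / std) ** 2

x = np.linspace(-5.0, 7.0, 13)
print(np.allclose(log_px(x), log_normal(x, mean=b, std=abs(a))))
```

In a real flow g is a deep network and Df(x) is a full matrix, which is where the cost of the determinant becomes the bottleneck.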

Normalizing flows in practice¶

Very elegant method, but it places constraints on our neural network g.

g must be invertible (not difficult to obtain).

We must be able to compute det(Df(x)) and compute it efficiently (difficult to obtain).

For many of the architectures that you have seen the computation is too expensive.

How can we use our favourite architectures?

Solutions exist:

RealNVP from [Dinh et al., 2016]. Simple and clever solution (the article is recommended), but we still have to modify our overall network architecture.

FFJORD from [Grathwohl et al., 2018]. No constraints on the architecture, but it is computationally slow (it must also run an ordinary differential equation solver).

See this review [Kobyzev et al., 2019] for an overview of the challenges in Normalizing Flows.

Active area of research, much room for improvement.
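
To give a flavour of how an architecture can be made invertible with a cheap determinant, here is a minimal sketch of a single affine coupling layer in the spirit of RealNVP. It is not the reference implementation: fixed random linear maps stand in for the trained scale and shift networks, and all names are assumptions for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, half = 4, 2

# Fixed random linear maps stand in for the trained scale/shift networks.
w_s = 0.5 * rng.normal(size=(half, half))
w_t = rng.normal(size=(half, half))

def scale_and_shift(z_a):
    """Any function of the untouched half is allowed here."""
    return np.tanh(z_a @ w_s), z_a @ w_t

def g(z):
    """z -> x. Returns x and log|det Dg(z)| for each row of z."""
    z_a, z_b = z[:, :half], z[:, half:]
    s, t = scale_and_shift(z_a)
    x = np.concatenate([z_a, z_b * np.exp(s) + t], axis=1)
    return x, s.sum(axis=1)

def f(x):
    """x -> z (the inverse of g). Returns z and log|det Df(x)|."""
    x_a, x_b = x[:, :half], x[:, half:]
    s, t = scale_and_shift(x_a)       # x_a equals z_a, so s and t are recoverable
    z = np.concatenate([x_a, (x_b - t) * np.exp(-s)], axis=1)
    return z, -s.sum(axis=1)

def log_px(x):
    """Change of variable formula with a standard normal prior p_z."""
    z, log_det = f(x)
    log_pz = -0.5 * (z**2).sum(axis=1) - 0.5 * dim * np.log(2 * np.pi)
    return log_pz + log_det

z = rng.normal(size=(3, dim))         # sample from the prior
x, _ = g(z)                           # generate samples
z_back, _ = f(x)
print(np.allclose(z, z_back), log_px(x))
```

Because half of the vector passes through unchanged, the Jacobian is triangular and its log-determinant is just a sum of the scales. The price is that several such layers, with the halves swapped in between, are needed to transform every dimension.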

Normalizing flows examples¶

Normalizing flows have an important application in molecular conformational ensembles modeling: Boltzmann generators [Noe et al., 2019].

Normalizing flows overview¶

Pros:

Good quality samples.

Sampling speed is good.

Allow us to compute px(x).

Very popular in conformational ensembles modeling.

Allow us to build Boltzmann generators.

With new methodological improvements, could be a very promising method.

Cons:

Place constraints on neural network architectures.

Not immediately easy to work with.

Limited model capacity.

Variational Autoencoders (VAE)¶

VAE overview¶

Probabilistic models. Conceptually slightly more complex than other models, but very elegant.

There are different ways of interpreting and understanding VAEs.

We will give a high-level and schematic overview of the method.

Autoencoder view of VAEs.

We will skip many important details.

If you are interested in these topics:

We encourage you to study them more deeply.

Introductory blog post: link.

Extremely well-done lecture on VAEs: video.

From the Berkeley CS294-158-SP20 course on generative modeling. Check the other lectures too!

Introductory review [Doersch, 2016].

Also try the original paper [Kingma and Welling, 2013].

Autoencoders¶

An Encoder NN (E) takes as input some x from the training set.

It compresses it to some lower dimensional z (in a latent space).

A Decoder NN (D) takes z and tries to reconstruct x.

How to train? We use a reconstruction loss. Example: the L2 loss, where x' = D(E(x)) is the reconstruction of x:

\(L_{reconstruction} = ||\boldsymbol{x} - \boldsymbol{x'}||^2\)
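
As a minimal sketch of the encode, decode and compare cycle, the example below uses linear maps obtained from an SVD as stand-ins for the Encoder and Decoder NNs. The toy data and all names are assumptions for illustration; a real autoencoder would learn non-linear E and D by gradient descent on the same loss.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 200 samples in 5 dimensions that lie close to a 2D plane.
latent_true = rng.normal(size=(200, 2))
x = latent_true @ rng.normal(size=(2, 5)) + 0.01 * rng.normal(size=(200, 5))
x = x - x.mean(axis=0)

# Linear stand-ins for E and D: project on the top-2 principal directions.
_, _, vt = np.linalg.svd(x, full_matrices=False)
w = vt[:2].T                          # shape (5, 2)

def encoder(x):
    """E: compress x to a 2-dimensional latent z."""
    return x @ w

def decoder(z):
    """D: reconstruct x' from z."""
    return z @ w.T

def reconstruction_loss(x, x_rec):
    """Mean over samples of ||x - x'||^2."""
    return ((x - x_rec) ** 2).sum(axis=1).mean()

z = encoder(x)
x_rec = decoder(z)
print(z.shape, reconstruction_loss(x, x_rec))
```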

We cannot easily use vanilla autoencoders as generative models¶

Generative modeling idea:

Train an autoencoder.

Sample from the latent space and reconstruct with the Decoder.

Problem: how to sample from the latent space?

Randomly sample from a uniform distribution? Will not work. Most samples will be “junk”.

Why: the distribution of points in the latent space is irregular. The Encoder “decided” it! Example from this blog post.

We need some form of regularization: VAEs¶

VAE solution: we force the points in the latent space to be distributed according to some simple pz (e.g.: normal). Image from [Kingma and Welling].

VAE solution: modify the loss we use to train our autoencoder.

\(L_{tot} = L_{reconstruction} + L_{regularization}\)

In VAEs, we use as \(L_{regularization}\) the Kullback-Leibler divergence between \(q_{\phi}(z | x)\) (our probabilistic encoder) and \(p_z(z)\) (our simple prior).
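
When the encoder outputs a diagonal Gaussian (a mean and a log-variance per latent dimension) and the prior is a standard normal, this divergence has a closed form. The sketch below assumes exactly that setting, together with an L2 reconstruction term and a unit weighting between the two terms; the batch of "encoder outputs" is random and purely hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), one value per sample."""
    return 0.5 * (mu**2 + np.exp(log_var) - 1.0 - log_var).sum(axis=1)

def reparameterize(mu, log_var, rng):
    """Draw z ~ q(z | x) as mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.normal(size=mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def vae_loss(x, x_rec, mu, log_var):
    """L_tot = L_reconstruction + L_regularization, averaged over the batch."""
    l_rec = ((x - x_rec) ** 2).sum(axis=1)
    l_reg = kl_to_standard_normal(mu, log_var)
    return (l_rec + l_reg).mean()

# Hypothetical encoder outputs for a batch of 4 samples, latent size 2.
mu = rng.normal(size=(4, 2))
log_var = 0.1 * rng.normal(size=(4, 2))
z = reparameterize(mu, log_var, rng)

# The regularization term vanishes when q(z | x) equals the prior.
print(kl_to_standard_normal(np.zeros((1, 2)), np.zeros((1, 2))))
```

Writing the sample as mu + sigma * eps keeps the sampling step differentiable with respect to the encoder outputs, which is what allows training by gradient descent.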

Why are they called variational?¶

VAEs are probabilistic models! They were derived using a variational approach.

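If we treat them as such, we can find out (see the links in previous slides) that their objective is:

\(\mathcal{L}(\theta, \phi; \boldsymbol{x}) = -D_{KL}(q_{\phi}(\boldsymbol{z} | \boldsymbol{x}) \,\|\, p_z(\boldsymbol{z})) + \mathbb{E}_{q_{\phi}(\boldsymbol{z} | \boldsymbol{x})}[\log p_{\theta}(\boldsymbol{x} | \boldsymbol{z})]\)

where, on the right side, the first term is the regularization objective and the second is the reconstruction objective.

And that:

\(\log p_{\theta}(\boldsymbol{x}) \geq \mathcal{L}(\theta, \phi; \boldsymbol{x})\)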

Therefore in VAEs we attempt to optimize a variational lower bound of the likelihood of training data points.

For this reason, their objective is also called ELBO (Evidence lower bound).

Formulas are from [Kingma and Welling, 2013].

Examples in conformational ensembles modeling¶

Example from [Simm and Hernández-Lobato, 2019]:

We saw it before when discussing conditional models!

It uses a VAE.

A good starting point for studying conformational ensembles modeling and Generative Models.

Example from [Rosenbaum et al., 2021]:

Proof of concept study in which a VAE is used to model the distribution of 3D conformations of a protein that underlies the micrographs in a Cryo-EM experiment.

VAEs summary¶

Seems to be the go-to choice of many machine learning practitioners.

Pros:

Easy-to-use and flexible.

Training is (usually) stable and quick.

Many applications in Molecular Sciences.

Cons:

A good theoretical understanding is necessary to get the most out of them (could also be in the “pros” list).

Not great sample quality (need improvements over the vanilla implementation).

Generative Adversarial Networks¶

History of GANs¶

From the original GAN article [Goodfellow et al., 2014] (we highly recommend reading it).

From an article some years later [Karras et al., 2019] (the first version of StyleGAN).

GAN overview¶

In GANs, we have two networks:

Generator (G):

Input: noise z. Output: samples x.

Discriminator (D):

Input: samples x. Output: probability of x being “real” (from training set) or “fake” (from the generator).

Both are trained in an adversarial game.

Image from Overview of GAN Structure from developers.google.com.

GAN objective¶

Let’s take a look at the GAN objective:

D(x): probability (a scalar from 0 to 1) that a sample x is real.

G(z): a generated sample x.

The D network wants to:

Maximize D(x).

Minimize D(G(z)).

The G network wants to:

Maximize D(G(z)).
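
In the original GAN article these goals are combined in the minimax objective \(\min_G \max_D \; \mathbb{E}_{x \sim p_{data}}[\log D(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D(G(z)))]\). The sketch below turns it into two losses to be minimized. It uses the non-saturating generator loss (maximize log D(G(z))), which the same article suggests in practice, and hypothetical discriminator outputs in place of real networks.

```python
import numpy as np

def discriminator_loss(d_real, d_fake):
    """D maximizes log D(x) + log(1 - D(G(z))); we minimize the negative."""
    return -(np.log(d_real) + np.log(1.0 - d_fake)).mean()

def generator_loss(d_fake):
    """G maximizes log D(G(z)) (non-saturating form); we minimize the negative."""
    return -np.log(d_fake).mean()

# Hypothetical discriminator outputs D(x) and D(G(z)) on one mini-batch.
d_real_good, d_fake_good = np.array([0.9, 0.8]), np.array([0.1, 0.2])
d_real_bad, d_fake_bad = np.array([0.5, 0.4]), np.array([0.6, 0.5])

# A discriminator that separates real from fake has the lower loss.
print(discriminator_loss(d_real_good, d_fake_good),
      discriminator_loss(d_real_bad, d_fake_bad))

# A generator that fools the discriminator has the lower loss.
print(generator_loss(np.array([0.9, 0.8])), generator_loss(np.array([0.1, 0.2])))
```

During training the two losses are minimized in alternation, each with respect to the parameters of its own network only.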

Note: we never get a way to compute pmodel(x)! We can only sample from it via x = G(z).

GANs are implicit density models.

Under ideal conditions, the global optimum of this objective will result in:

pmodel = pdata

For proof, see the original article (“Theoretical Results” section).

But we never have ideal conditions:

We train with batches.

We do not have a perfect optimization algorithm.

G and D have limited capacities.

So… only good approximations of pdata if we are careful enough (see next slide).

GAN training is notoriously unstable¶

A common problem is mode collapse (see example below from the NIPS 2016 Tutorial on GANs).

How to improve training? People have found several solutions that seem to work well:

WGAN: changes the GAN loss to approximate the Wasserstein distance. See [Arjovsky et al., 2017] and [Gulrajani et al., 2017].

Spectral normalization: regularizes the discriminator. See [Miyato et al., 2018].

And many others (active area of research).

Most modern GANs use at least one of these modifications.

GAN summary¶

Pros:

Usually considered the method with best sample quality.

Diffusion Models are catching up.

Once trained, they have very fast sampling capabilities.

In principle, we can use any NN architecture for G and D.

Cons:

Unstable training.

Stabilizing may require a LOT of hyper-parameter tuning.

Usually, training is computationally expensive (must update D and then G each mini-batch).

So far, not many examples in conformational ensembles modeling.

How to choose our Generative Model?¶

Some Guidelines on how to Choose our Model¶

| Name | Loss | Explicit Likelihood | Training stability | Sampling speed | Sample quality |
|---|---|---|---|---|---|
| VAE | ELBO | Can be estimated | Good | Fast | Average |
| Normalizing Flows | Maximum Likelihood | Yes | Good | Average | Good |
| GANs | Adversarial Loss (or others) | No | Bad | Very Fast | Very Good |

Note: please take this cum grano salis! Part of the table is based on clichés. Things will vary with time (e.g.: methodological improvements) and with the details of your project and implementation. This table is partly inspired by a table in [Bond-Taylor et al., 2021].

First narrow down our selection. What do we care about?

Fast sampling?

High-quality samples?

Exact evaluation of pmodel(x)?

Amount of fine-tuning (i.e.: time you want to invest in fixing the model)?

Start from what other people have been doing.

Method development (inventing entirely new things) in machine learning is not easy.

Trial and error process: often requires a lot of computational resources.

Empirically evaluate different choices.

In machine learning, that is what people do.

Once it works, start introducing modifications of our own!

4 - What are the limits of Generative Models when generating molecular conformations?¶

Modeling conformational ensembles: what can we learn?¶

We have a true data generating distribution µ.

In MD studies this can often be a Boltzmann distribution:

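\(p(\boldsymbol{x}) = e^{-E(\boldsymbol{x})/(kT)} / Z_x\)

Then we have our training set with \(\boldsymbol{x}^{(1)}, \boldsymbol{x}^{(2)}, \dots, \boldsymbol{x}^{(n)} = \{\boldsymbol{x}^{(i)}\}_{i=1}^{n}\)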

Remember, Generative Models learn from pdata, not from µ.

They do not have access to µ.

What if we did not sample µ thoroughly enough when collecting the samples?

Suppose: our system has Nstates metastable states.

In our MD simulation we sample only in one.

We train a generative model on that data only.

Will our model be able to generate samples (with the right probabilities) from other states…?

Most likely, no!

There is no free lunch, only local interpolation and extrapolation.
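
This thought experiment can be reproduced in one dimension. In the sketch below, a toy double-well energy plays the role of a system with two metastable states, the "MD" data is a Gaussian stand-in for a trajectory trapped in the left well, and the generative model is a Gaussian fitted by maximum likelihood. The potential, the temperature and all names are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
kT = 1.0

def energy(x):
    """Toy double-well potential with minima at x = -1 and x = +1."""
    return 5.0 * (x**2 - 1.0) ** 2

# Reference Boltzmann distribution mu on a grid (feasible only in 1D).
grid = np.linspace(-3.0, 3.0, 6001)
dx = grid[1] - grid[0]
boltzmann = np.exp(-energy(grid) / kT)
boltzmann /= boltzmann.sum() * dx                 # divide by Z_x
true_mass_right = boltzmann[grid > 0].sum() * dx  # probability of the right well

# "MD" training set that only ever visited the left well.
x_train = rng.normal(loc=-1.0, scale=0.15, size=2000)

# Simple generative model: a Gaussian fitted by maximum likelihood.
mu_hat, sigma_hat = x_train.mean(), x_train.std()
generated = rng.normal(mu_hat, sigma_hat, size=100_000)
model_mass_right = (generated > 0).mean()

print(true_mass_right, model_mass_right)
```

By symmetry the right well carries about half of the Boltzmann probability, yet the fitted model essentially never generates a sample there: it reproduces pdata, not µ.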

How to recover µ and generate entirely new conformations then…?¶

Open (and difficult) challenge.

Already accurately modeling pdata is not easy.

Start simple and then build up.

Solutions?

Very interesting (incomplete) solution to the problem (Boltzmann Generators).

“Vanilla” generative models may be only part of the solution.

New ideas are necessary!